System Hardware Requirements for MXNet in 2025

Introduction

MXNet is a scalable deep learning framework for building and training machine learning models. Known for its flexibility and its support for multiple programming languages, it is used in applications such as computer vision, natural language processing, and recommendation systems. Hardware requirements for running MXNet in 2025 are shaped by increasingly complex models, larger datasets, and the need for faster training and inference. This blog breaks those requirements down by component: CPU, GPU, RAM, storage, and operating system support. Each section includes a table summarizing the recommended hardware for different use cases.

Table of Contents

- Introduction

- Why Hardware Requirements Matter for MXNet

- CPU Requirements

- GPU Requirements

- RAM Requirements

- Storage Requirements

- Operating System Support

- Hardware Requirements for Different Use Cases

  - Basic Usage

  - Intermediate Usage

  - Advanced Usage

- Future-Proofing Your System

- Conclusion

Why Hardware Requirements Matter for MXNet

MXNet is designed for high-performance deep learning, making it ideal for tasks like model training and inference. As models and datasets grow larger, the hardware requirements for running MXNet will increase. The right hardware ensures faster training times, efficient inference, and the ability to handle advanced AI tasks.

In 2025, with the rise of applications like autonomous systems, healthcare AI, and large-scale recommendation engines, having a system that meets the hardware requirements for MXNet will be critical for achieving optimal performance.

CPU Requirements

The CPU plays a supporting role in MXNet, handling tasks like data preprocessing, model compilation, and managing GPU operations.

Recommended CPU Specifications for MXNet in 2025

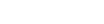

Selecting the right CPU keeps data preprocessing and GPU management from becoming a bottleneck. For Basic Usage, a 6-core Intel or AMD x86-64 CPU running at 3.0 GHz with 12 MB of cache is recommended; this is suitable for small-scale models and basic deep learning tasks. For Intermediate Usage, an 8-core CPU at 3.5 GHz with 16 MB of cache suits moderately complex models and faster processing. For Advanced Usage, especially with large datasets and intensive training, a CPU with 12 or more cores, a clock speed of 4.0 GHz or higher, and 32 MB or more of cache is recommended, which leaves more headroom for parallel processing in large-scale machine learning projects.


Explanation:

- Basic Usage: A hexa-core CPU is sufficient for small-scale MXNet tasks.

- Intermediate Usage: An octa-core CPU is recommended for medium-sized models and datasets.

- Advanced Usage: A 12-core (or more) CPU is ideal for large-scale MXNet training and inference.
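As a rough way to see which tier a machine's CPU falls into, the short Python sketch below maps a core count to the tiers above. The function name `cpu_tier` and the thresholds (6, 8, and 12 cores) simply mirror this post's recommendations; they are illustrative assumptions, not limits that MXNet enforces. Note that `os.cpu_count()` reports logical cores, which can be higher than the physical core count on CPUs with simultaneous multithreading.

```python
import os


def cpu_tier(cores: int) -> str:
    """Map a core count to this post's usage tiers (illustrative thresholds)."""
    if cores >= 12:
        return "advanced"
    if cores >= 8:
        return "intermediate"
    if cores >= 6:
        return "basic"
    return "below basic"


if __name__ == "__main__":
    # os.cpu_count() may return None when the count cannot be determined.
    logical = os.cpu_count() or 0
    print(f"{logical} logical cores -> {cpu_tier(logical)}")
```

Clock speed and cache size are left out here because the Python standard library does not expose them portably.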

GPU Requirements

GPUs are critical for accelerating computationally intensive tasks in MXNet, such as model training and inference.

Recommended GPU Specifications for MXNet in 2025

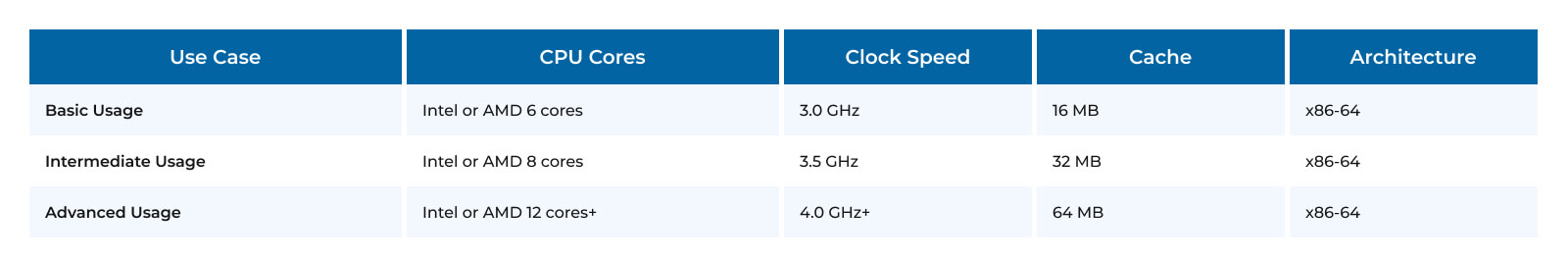

The GPU does most of the heavy lifting in MXNet training and inference, so it is usually the most important component to size correctly. For Basic Usage, the NVIDIA RTX 3060 with 12 GB of VRAM, 3584 CUDA cores, 112 Tensor cores, and 360 GB/s of memory bandwidth is recommended; it is suitable for small-scale models and entry-level deep learning tasks. For Intermediate Usage, the NVIDIA RTX 4080 offers a significant step up with 16 GB of VRAM, 9728 CUDA cores, 304 Tensor cores, and 716 GB/s of memory bandwidth, which suits more complex models, larger datasets, and faster training. For Advanced Usage, particularly large-scale deep learning projects and high-performance computing, the NVIDIA RTX 4090 is recommended. It features 24 GB of VRAM, 16384 CUDA cores, 512 Tensor cores, and roughly 1 TB/s of memory bandwidth, giving the most headroom for demanding AI workloads.


Explanation:

- Basic Usage: An NVIDIA RTX 3060 is sufficient for small to medium-sized MXNet tasks.

- Intermediate Usage: An NVIDIA RTX 4080 is recommended for larger models and real-time inference.

- Advanced Usage: An NVIDIA RTX 4090 is ideal for cutting-edge research and industrial applications.
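To check what an existing machine offers, one option is to query `nvidia-smi` and compare the reported VRAM with the tiers above. The sketch below is a minimal example under a few assumptions: the helper names (`parse_gpu_csv`, `gpu_tier`, `query_gpus`) are hypothetical, the VRAM thresholds come from this post's table, and VRAM is rounded to the nearest GiB because the driver can report a total slightly below the nominal figure.

```python
import shutil
import subprocess


def parse_gpu_csv(text):
    """Parse 'name, memory_in_MiB' lines into (name, MiB) tuples."""
    gpus = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split on the last comma so commas inside a name would not break parsing.
        name, mem = line.rsplit(",", 1)
        gpus.append((name.strip(), int(mem)))
    return gpus


def gpu_tier(vram_mib):
    """Map VRAM (MiB) to this post's tiers; thresholds are illustrative."""
    vram_gib = round(vram_mib / 1024)
    if vram_gib >= 24:
        return "advanced"
    if vram_gib >= 16:
        return "intermediate"
    if vram_gib >= 12:
        return "basic"
    return "below basic"


def query_gpus():
    """Return (name, MiB) for each visible NVIDIA GPU, or [] if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        return []
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for name, mib in query_gpus():
        print(f"{name}: {mib} MiB -> {gpu_tier(mib)}")
```

On a machine without an NVIDIA driver, `query_gpus()` returns an empty list and the script prints nothing.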

RAM Requirements

RAM is critical for handling large datasets and model parameters during training and inference.

Recommended RAM Specifications for MXNet in 2025

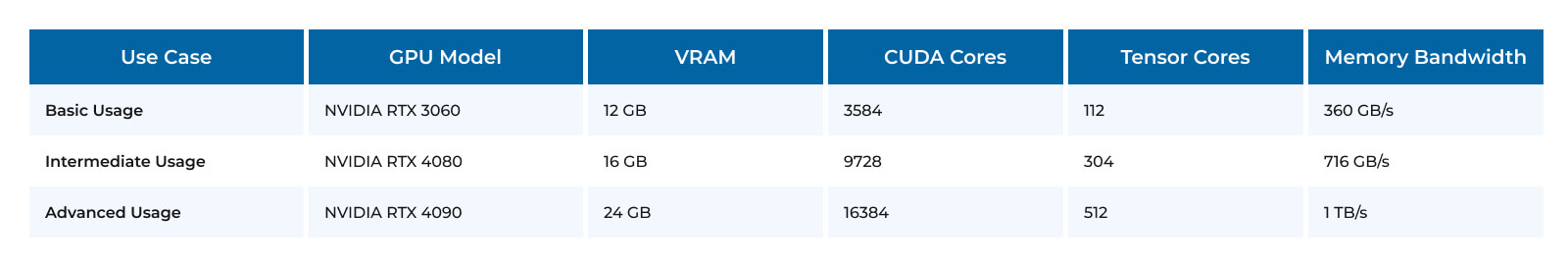

Enough RAM keeps data loading and preprocessing from stalling training. For Basic Usage, 16 GB of DDR4 RAM rated at 3200 MT/s (DDR4-3200) is recommended; this is sufficient for small datasets, basic machine learning models, and light workloads. For Intermediate Usage, 32 GB of DDR4 RAM rated at 3600 MT/s suits more complex models, moderate datasets, and faster training. For Advanced Usage, especially large-scale deep learning projects, high-resolution data, and intensive workloads, 64 GB or more of DDR5 RAM rated at 4800 MT/s is recommended, providing higher data transfer rates and more room for multitasking in demanding AI applications.

Explanation:

- Basic Usage: 16 GB of DDR4 RAM is sufficient for small-scale MXNet tasks.

- Intermediate Usage: 32 GB of DDR4 RAM is recommended for medium-sized models and datasets.

- Advanced Usage: 64 GB or more of DDR5 RAM is ideal for large-scale MXNet training and inference.
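To get a feel for how model size translates into memory, a back-of-the-envelope estimate helps. The sketch below assumes 32-bit (4-byte) parameters and an Adam-style optimizer that keeps two extra values per parameter, so training holds roughly four copies of the parameter count: weights, gradients, and two optimizer states. The function name `training_memory_gib` is hypothetical, and the estimate ignores activations, data batches, and framework overhead, so treat it as a lower bound. The same arithmetic applies to GPU memory when training on a GPU.

```python
def training_memory_gib(num_params, bytes_per_param=4, optimizer_states=2):
    """Rough lower bound on memory for weights, gradients, and optimizer state.

    Assumes one gradient value and `optimizer_states` extra values per
    parameter; activations and data batches are not included.
    """
    copies = 1 + 1 + optimizer_states  # weights + gradients + optimizer state
    return num_params * bytes_per_param * copies / 2**30


if __name__ == "__main__":
    for params in (25_000_000, 350_000_000, 1_000_000_000):
        print(f"{params:>13,} params -> {training_memory_gib(params):.2f} GiB")
```

Under these assumptions, a model with one billion parameters needs just under 15 GiB before any activations or input data are counted.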

Storage Requirements

Storage speed and capacity impact how quickly data can be loaded and saved during training and inference.

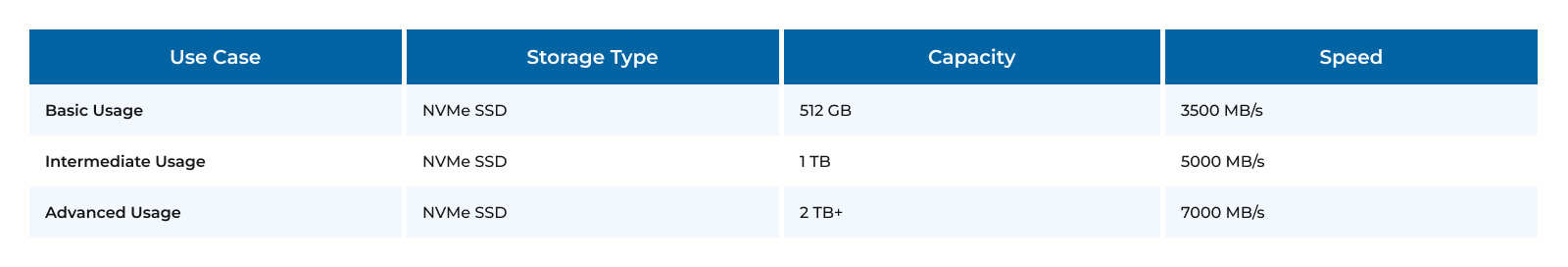

Recommended Storage Specifications for MXNet in 2025

Fast storage shortens dataset loading and checkpoint saving. For Basic Usage, a 512 GB NVMe SSD with a sequential speed of about 3500 MB/s is recommended; it suits small projects, basic machine learning models, and general data storage, with quicker boot times and data access than traditional hard drives. For Intermediate Usage, a 1 TB NVMe SSD at about 5000 MB/s is a better fit for larger datasets and faster model loading. For Advanced Usage, especially large-scale AI projects, extensive datasets, and high-performance computing tasks, an NVMe SSD with 2 TB or more of capacity and a speed of about 7000 MB/s is recommended, offering the highest transfer rates and lowest latency of the three tiers.

Explanation:

- Basic Usage: A 512 GB NVMe SSD is sufficient for small datasets.

- Intermediate Usage: A 1 TB NVMe SSD is recommended for medium-sized datasets.

- Advanced Usage: A 2 TB or larger NVMe SSD is ideal for large datasets and high-speed data access.
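The practical effect of drive speed is easiest to see as the time needed to read a dataset once. The sketch below divides dataset size by the rated sequential read speed; the function name `read_time_seconds` and the 500 GB example dataset are assumptions for illustration. Rated speeds are best-case figures, so real loading with many small files or random access will usually be slower.

```python
def read_time_seconds(dataset_gb, read_mb_per_s):
    """Best-case time to read a dataset once at a given sequential speed.

    Uses decimal units (1 GB = 1000 MB), matching how drive speeds are rated.
    """
    if read_mb_per_s <= 0:
        raise ValueError("read speed must be positive")
    return dataset_gb * 1000 / read_mb_per_s


if __name__ == "__main__":
    dataset_gb = 500  # hypothetical dataset size
    for label, speed in (("basic", 3500), ("intermediate", 5000), ("advanced", 7000)):
        seconds = read_time_seconds(dataset_gb, speed)
        print(f"{label:>12}: {seconds:.0f} s per full pass")
```

For the hypothetical 500 GB dataset, doubling the drive speed from 3500 MB/s to 7000 MB/s halves the best-case read time per pass.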

Operating System Support

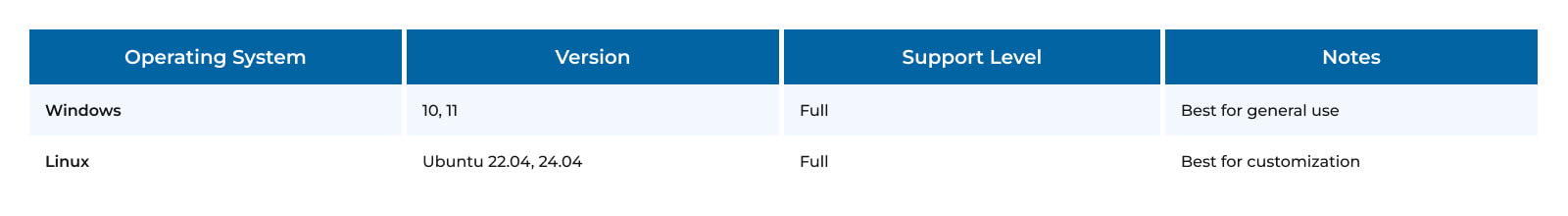

MXNet is compatible with major operating systems, but performance may vary.

Operating System Support for MXNet in 2025

The operating system affects how easily MXNet can be installed and tuned. Windows 10 and 11 are listed as fully supported and suit general use, with a familiar interface and broad software compatibility. Linux distributions such as Ubuntu 22.04 and 24.04 are also listed as fully supported and are the better choice for customization, performance tuning, and development environments.


Explanation:

- Windows: Fully supported and ideal for general use.

- Linux: Fully supported and ideal for advanced users who need customization.
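Whichever operating system you choose, it is worth confirming that MXNet actually imports and can see your GPUs. The sketch below is one way to do that, assuming the MXNet 1.x Python API, where `mx.context.num_gpus()` reports the number of visible CUDA devices. The function name `environment_report` is hypothetical, and any import or runtime failure is reported rather than raised.

```python
import platform


def environment_report():
    """Collect OS details and, if MXNet is usable, its version and GPU count."""
    report = {
        "os": platform.system(),
        "os_release": platform.release(),
        "mxnet": None,
    }
    try:
        import mxnet as mx  # assumes an MXNet 1.x installation
        report["mxnet"] = {
            "version": mx.__version__,
            "gpus": mx.context.num_gpus(),
        }
    except Exception as exc:
        # Covers a missing package as well as import-time incompatibilities.
        print(f"MXNet not usable in this environment: {exc!r}")
    return report


if __name__ == "__main__":
    print(environment_report())
```

If the `mxnet` entry comes back as `None`, the printed message shows whether the package is missing or failed to load.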

Hardware Requirements for Different Use Cases

Basic Usage

For small-scale MXNet tasks:

- CPU: Intel or AMD 6 cores, 3.0 GHz

- GPU: NVIDIA RTX 3060, 12 GB VRAM

- RAM: 16 GB DDR4

- Storage: 512 GB NVMe SSD

- OS: Windows 10, Ubuntu 22.04

Intermediate Usage

For medium-sized models and real-time inference:

- CPU: Intel or AMD 8 cores, 3.5 GHz

- GPU: NVIDIA RTX 4080, 16 GB VRAM

- RAM: 32 GB DDR4

- Storage: 1 TB NVMe SSD

- OS: Windows 11, Ubuntu 24.04

Advanced Usage

For cutting-edge research and industrial applications:

- CPU: Intel or AMD 12 cores+, 4.0 GHz+

- GPU: NVIDIA RTX 4090, 24 GB VRAM

- RAM: 64 GB+ DDR5

- Storage: 2 TB+ NVMe SSD

- OS: Windows 11, Ubuntu 24.04
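The three profiles above can also be expressed as data, which makes it easy to check which tier a given machine fully satisfies. The sketch below is illustrative only: the `Spec` class, the `TIERS` list, and `highest_tier` are hypothetical names, and the minimums are copied from this post's lists (clock speed, RAM generation, and OS are left out for brevity). A machine qualifies for a tier only if every component meets that tier's minimum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    cpu_cores: int
    vram_gb: int
    ram_gb: int
    storage_gb: int


# Minimums per tier, ordered from most to least demanding.
TIERS = [
    ("advanced", Spec(cpu_cores=12, vram_gb=24, ram_gb=64, storage_gb=2000)),
    ("intermediate", Spec(cpu_cores=8, vram_gb=16, ram_gb=32, storage_gb=1000)),
    ("basic", Spec(cpu_cores=6, vram_gb=12, ram_gb=16, storage_gb=512)),
]


def highest_tier(machine: Spec) -> str:
    """Return the most demanding tier whose minimums the machine meets in full."""
    for name, minimum in TIERS:
        if (machine.cpu_cores >= minimum.cpu_cores
                and machine.vram_gb >= minimum.vram_gb
                and machine.ram_gb >= minimum.ram_gb
                and machine.storage_gb >= minimum.storage_gb):
            return name
    return "below basic"


if __name__ == "__main__":
    # A strong CPU with a 12 GB GPU is still limited to the basic tier.
    print(highest_tier(Spec(cpu_cores=16, vram_gb=12, ram_gb=64, storage_gb=2000)))
```

Because the weakest component decides the result, this kind of check is a quick way to spot which part to upgrade first.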

Future-Proofing Your System

To ensure your system remains capable of running MXNet efficiently in 2025 and beyond:

- Invest in a Multi-Core CPU: A CPU with multiple cores and high clock speeds will handle future demands.

- Upgrade to DDR5 RAM: DDR5 offers higher speeds and better efficiency.

- Use NVMe SSDs: NVMe SSDs provide faster data access for large datasets.

- Consider High-End GPUs: A powerful GPU is essential for accelerating MXNet computations.

- Keep Your OS Updated: Regularly update your operating system for compatibility with the latest MXNet versions.

Conclusion

As we move toward 2025, the hardware requirements for running MXNet will continue to evolve. By ensuring your system meets these requirements, you can achieve optimal performance and stay ahead in the field of deep learning and AI.

Whether you’re a beginner, an intermediate user, or an advanced researcher, the hardware specifications outlined in this blog will help you build a system capable of running MXNet efficiently and effectively. Future-proof your setup today to handle the demands of tomorrow!


Shubham Kumar

As a Frontend Developer at ProX PC, Shubham specializes in building high-performance web applications using React.js and Next.js. By prioritizing clean, scalable code and efficient API integrations, he ensures every project remains maintainable and robust.
