GPU Hardware Requirements Guide for DeepSeek Models in 2025

DeepSeek models continue to push the boundaries of large language model (LLM) performance, offering unparalleled capabilities across various domains. However, their computational demands remain substantial, necessitating careful hardware selection. This guide provides updated system requirements, including VRAM estimates, GPU recommendations, and optimization strategies for all DeepSeek model variants in 2025, along with insights into ProX PC’s GPU servers and workstations tailored for these needs. Explore custom workstations at proxpc.com.

Key Factors Influencing Hardware Requirements

The hardware demands of DeepSeek models depend on several critical factors:

- Model Size: Larger models with more parameters (e.g., 7B vs. 671B) require significantly more VRAM and compute power.

- Quantization: Techniques such as 4-bit integer quantization and mixed-precision computation can drastically lower VRAM consumption.

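- Parallelism & Distribution: High-parameter models may require multi-GPU setups that use model parallelism (tensor or pipeline parallelism) to split the weights across devices. Data parallelism alone replicates the full model on every GPU, so it improves throughput but does not reduce per-GPU memory.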
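Multi-GPU strategies differ in what they do to per-GPU memory. The sketch below is a simplification that assumes weights split evenly and ignores activations, KV cache, and framework overhead. It shows that tensor and pipeline parallelism divide the weights across GPUs while data parallelism only adds replicas. The function name `plan_weight_sharding` is hypothetical, not part of any framework.

```python
def plan_weight_sharding(total_weight_gb: float, tensor_parallel: int = 1,
                         pipeline_parallel: int = 1, data_parallel: int = 1):
    """Return (weight GB held by each GPU, total GPUs used).

    Hypothetical helper. Assumes an even split of weights and ignores
    activations, KV cache, and framework overhead.
    """
    for degree in (tensor_parallel, pipeline_parallel, data_parallel):
        if degree < 1:
            raise ValueError("parallelism degrees must be >= 1")
    # Tensor and pipeline parallelism shard the weights across GPUs.
    shards = tensor_parallel * pipeline_parallel
    per_gpu_gb = total_weight_gb / shards
    # Data parallelism replicates each sharded copy: it multiplies the GPU
    # count but leaves per-GPU weight memory unchanged.
    total_gpus = shards * data_parallel
    return per_gpu_gb, total_gpus


# ~220 GB at FP16 (the 100B row of the VRAM table) with 4-way tensor
# parallelism, replicated twice for throughput:
print(plan_weight_sharding(220, tensor_parallel=4, data_parallel=2))  # (55.0, 8)
```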

ProX PC: Empowering AI with High-Performance Hardware

ProX PC offers cutting-edge GPU servers and workstations designed to meet the demanding requirements of AI workloads like DeepSeek models. With a strong emphasis on reliability, scalability, and efficiency, ProX PC provides:

GPU Servers

- High-Density Multi-GPU Configurations: Servers supporting up to 8 NVIDIA H100 or A100 GPUs, ideal for large-scale training and inference tasks.

- Advanced Cooling Solutions: Efficient thermal management to ensure sustained performance under heavy workloads.

- Centralized Management: Integrated with ProX PC’s centralized portal for ticketing, monitoring, and maintenance, providing a hassle-free experience.

Check out our ProX AI SuperServer, designed for scalable AI deployments.

Workstations

- Creator and Developer-Friendly Systems: Equipped with GPUs like NVIDIA RTX 4090 or RTX 6000 Ada for prototyping and medium-scale training.

- Compact Yet Powerful: Optimized for smaller labs and individual researchers needing high performance without server-scale hardware.

- Customizable Configurations: Tailored to specific DeepSeek model variants and use cases.

Explore our ProX AI Workstation, perfect for researchers and developers.

- Model Inferencing Workstation

By offering a one-stop solution, ProX PC ensures smooth deployment and management for AI enthusiasts, researchers, and enterprises alike.

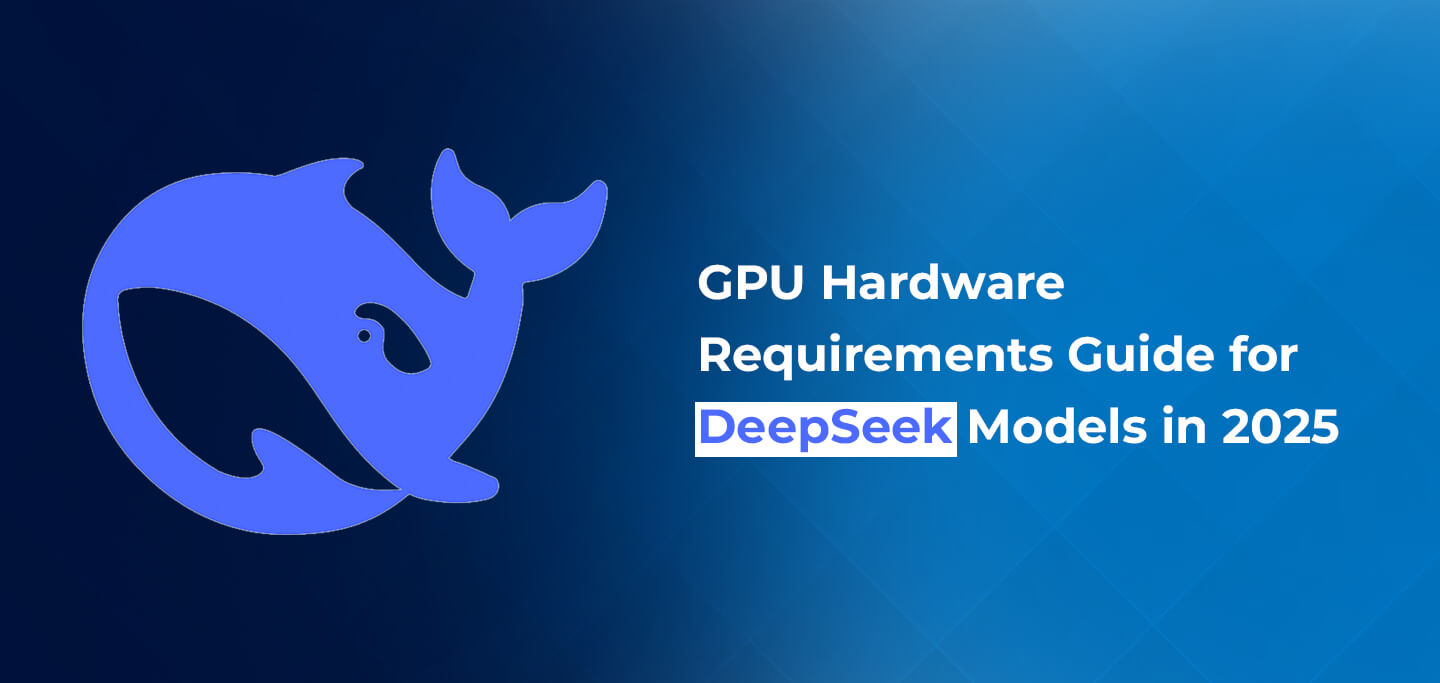

VRAM Requirements for DeepSeek Models

| Model Variant | VRAM at FP16 | VRAM at 4-bit Quantization |
|---|---|---|
| 7B | ~14 GB | ~4 GB |
| 16B | ~30 GB | ~8 GB |
| 100B | ~220 GB | ~60 GB |
| 671B | ~1.2 TB | ~400 GB |
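The FP16 and 4-bit columns follow from simple arithmetic: weight memory is roughly the parameter count times bits per parameter, divided by 8. The sketch below assumes weights dominate and uses decimal gigabytes; the `overhead` multiplier is a hypothetical knob for activations, KV cache, and framework buffers, not a measured value.

```python
def weight_memory_gb(num_params: float, bits_per_param: int,
                     overhead: float = 1.0) -> float:
    """Approximate model memory in decimal GB (1 GB = 1e9 bytes).

    overhead = 1.0 counts raw weights only; values above 1.0 are a
    hypothetical allowance for activations, KV cache, and runtime buffers.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes * overhead / 1e9


print(weight_memory_gb(7e9, 16))    # 14.0 (FP16)
print(weight_memory_gb(7e9, 4))     # 3.5 (4-bit)
print(weight_memory_gb(671e9, 16))  # 1342.0
```

Raw FP16 weights for 671B come to about 1,342 GB in decimal units, which is roughly 1.22 TiB in binary units, in line with the table's ~1.2 TB figure. Treat the results as a lower bound, since real deployments also need memory for activations and the KV cache.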

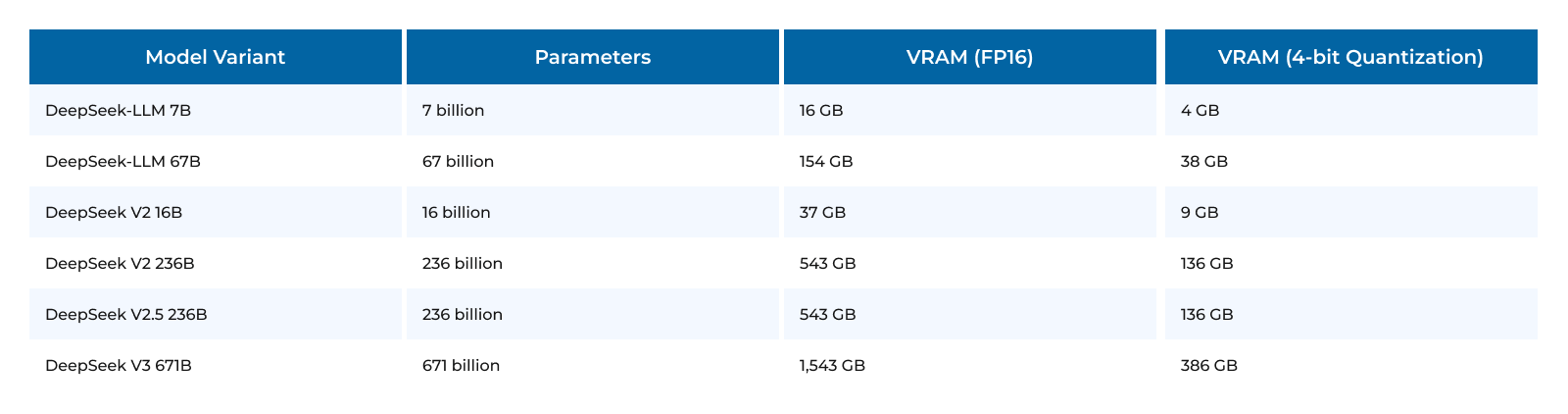

Recommended GPUs for DeepSeek Models

Based on VRAM requirements, the following GPUs are recommended:

| Model Variant | Consumer / Workstation GPUs | Data Center GPUs |
|---|---|---|
| 7B | NVIDIA RTX 4090, RTX 6000 | NVIDIA A40, A100 |
| 16B | NVIDIA RTX 6000, RTX 8000 | NVIDIA A100, H100 |
| 100B | N/A | NVIDIA H100, H200 (multi-GPU setups) |
| 671B | N/A | NVIDIA H200 (multi-GPU setups) |
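To turn a VRAM estimate into a GPU count for the multi-GPU rows, divide by the usable memory per card and round up. This sketch assumes identical GPUs and an even split; `usable_fraction` is a hypothetical headroom factor for memory reserved by the CUDA context and framework, and 0.9 is an illustrative default, not a vendor figure.

```python
import math


def min_gpus(required_gb: float, gpu_vram_gb: float,
             usable_fraction: float = 0.9) -> int:
    """Smallest number of identical GPUs whose usable VRAM covers required_gb."""
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    # Only part of each card's VRAM is assumed to be available for the model.
    usable_per_gpu = gpu_vram_gb * usable_fraction
    return math.ceil(required_gb / usable_per_gpu)


# 100B at FP16 (~220 GB) on 80 GB H100s:
print(min_gpus(220, 80))                       # 4 with 10% headroom
print(min_gpus(220, 80, usable_fraction=1.0))  # 3 with no headroom
```

Many serving frameworks also require the tensor-parallel degree to divide the model's attention-head count, so in practice the result is often rounded up to 4 or 8 GPUs.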

Performance Comparison of GPU Hardware

| GPU Model | VRAM | FP16 TFLOPS | Best Fit (DeepSeek) |
|---|---|---|---|
| RTX 4090 | 24 GB | 82.6 | Best for 7B models |
| RTX 6000 (Ada) | 48 GB | 91.1 | Ideal for 7B-16B |
| A100 | 40/80 GB | 78 | Enterprise-grade for 16B-100B |
| H100 | 80 GB | 183 | Optimal for 100B+ |
| H200 | 141 GB | 250 | Best for 671B |
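The FP16 TFLOPS column can be turned into a rough ceiling on throughput. A common approximation is that a dense transformer's forward pass costs about 2 FLOPs per parameter per generated token, so peak TFLOPS divided by that cost bounds tokens per second. This is a sketch under that assumption: it ignores attention FLOPs and memory bandwidth, and `utilization` is a hypothetical efficiency factor.

```python
def max_tokens_per_second(fp16_tflops: float, num_params: float,
                          utilization: float = 1.0) -> float:
    """Compute-bound upper limit on tokens/s for a dense model.

    Assumes ~2 FLOPs per parameter per token (forward pass only) and ignores
    attention FLOPs and memory-bandwidth limits.
    """
    flops_per_token = 2 * num_params
    return fp16_tflops * 1e12 * utilization / flops_per_token


# RTX 4090 (82.6 FP16 TFLOPS from the table) running a 7B model:
print(round(max_tokens_per_second(82.6, 7e9)))  # 5900
```

Single-request decoding is usually limited by memory bandwidth rather than compute, so observed rates are much lower; the ceiling is approached only with large batches or during prompt prefill. For mixture-of-experts models, count only the parameters active per token.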

Optimizing Performance for Large-Scale Models

To efficiently deploy DeepSeek models, consider these practical optimization techniques:

- Mixed Precision Operations

Using lower-precision formats such as FP16 for computation, or INT8 for quantized weights, can dramatically reduce VRAM consumption with little loss in accuracy. GPUs with Tensor Cores (e.g., NVIDIA H100, H200) excel at mixed-precision workloads.

- Gradient Checkpointing

During training, this method reduces memory usage by storing fewer activations in the forward pass and recomputing the rest during the backward pass, lowering VRAM requirements at the cost of slightly longer computation time.

- Batch Size Adjustments

Smaller batch sizes reduce memory usage but can lower throughput. Balance batch size against performance based on available resources.

- Distributed Processing & Model Parallelism

For models exceeding 100B parameters, split the model across multiple GPUs with model parallelism, optionally combined with data parallelism for higher throughput. Techniques like tensor parallelism and pipeline parallelism enable scalable and efficient execution.
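For inference, much of the memory that scales with batch size is the key-value (KV) cache, which grows linearly with both batch size and sequence length. The sketch below assumes standard multi-head or grouped-query attention, and the model dimensions in the example are hypothetical rather than those of a specific DeepSeek model; architectures that compress the KV cache, such as the Multi-head Latent Attention used in DeepSeek-V2, need less.

```python
def kv_cache_gb(batch_size: int, seq_len: int, num_layers: int,
                num_kv_heads: int, head_dim: int,
                bytes_per_value: int = 2) -> float:
    """KV cache size in decimal GB for standard multi-head/grouped-query attention.

    The leading factor of 2 stores both keys and values at every layer.
    bytes_per_value = 2 corresponds to FP16/BF16.
    """
    values = 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size
    return values * bytes_per_value / 1e9


# Hypothetical 32-layer model, 32 KV heads of dim 128, 4,096-token context:
for batch in (1, 8):
    print(batch, round(kv_cache_gb(batch, 4096, 32, 32, 128), 2))
# 1 2.15
# 8 17.18
```

Halving the batch size halves this term, which is the trade-off described under Batch Size Adjustments.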

Conclusion

DeepSeek models continue to advance AI capabilities, but their hardware requirements demand careful planning. ProX PC’s range of GPU servers and workstations offers the perfect balance of power, scalability, and support to meet these demands.

For smaller models, consumer and workstation GPUs offer a cost-effective solution: the NVIDIA RTX 4090 handles 7B at FP16 and 16B with 4-bit quantization, while the 48 GB RTX 6000 Ada fits 16B at FP16. Larger models necessitate data center-grade GPUs such as the H100 or H200, often in multi-GPU configurations. By choosing the right hardware and applying optimization strategies, you can deploy DeepSeek models efficiently at any scale in 2025.

Explore more about our products, such as the ProX AI Edge Server and ProX Scalable Rack Solutions, to elevate your AI deployment.

