HIGH-PERFORMANCE AI COMPUTING GPU SERVER

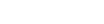

8X NVIDIA H100 Tensor Core SERVER

NVIDIA H100 SERVER

The Pro Maestro NVIDIA H100 Series delivers unprecedented performance, scalability, and security for the world’s most demanding workloads. Built on the NVIDIA Hopper™ architecture, this system features a dedicated Transformer Engine designed to accelerate trillion-parameter language models. Whether you need the raw throughput of the 8-GPU SXM5 configuration for massive training runs or the higher memory capacity of the H100 NVL for heavy inference, the Pro Maestro series bridges the gap between data center and discovery.

Unprecedented acceleration for the world’s most demanding AI and machine learning workloads

NVIDIA MAESTRO H100

8× NVIDIA H100 80GB SXM

Up to 3TB RDIMM (2R) or up to 16TB RDIMM-3DS (2S8Rx4)

Supports 5th and 4th Gen Intel® Xeon® Scalable Processors

Up to 128 cores / 256 threads, up to 4.1GHz

Up to 400G networking

Availability

8X NVIDIA H100 GPU Server

Key Features

- Ready-to-ship

- Optimal Price

- Fast & Stable Connectivity

Why Choose the "Hopper" H100 GPU?

10X Faster Terabyte-Scale Accelerated Computing

4X Faster Training on LLMs

Compared to the previous-generation A100, using FP8 precision and fourth-generation Tensor Cores.

30X Faster Inference

On massive Mixture-of-Experts (MoE) models, delivering real-time response latency for applications such as chatbots and copilots.

7X Higher Performance

For double-precision (FP64) vector and matrix HPC applications such as molecular dynamics and climate simulation.

NVIDIA H100

Specifications

| Feature | Pro Maestro H100 SXM (HGX) | Pro Maestro H100 NVL (PCIe) |
|---|---|---|
| GPU Architecture | NVIDIA Hopper™ SXM5 | NVIDIA Hopper™ NVL (Dual-Slot PCIe) |
| GPU Memory | 80GB HBM3 per GPU | 94GB HBM3 per GPU (188GB per Pair) |
| Memory Bandwidth | 3.35 TB/s per GPU | 7.8 TB/s (Combined Pair) |
| Interconnect | 900 GB/s NVLink | 600 GB/s NVLink Bridge |
| TF32 Tensor Core* | 989 TFLOPS per GPU | 1,671 TFLOPS (Combined Pair) |
| FP8 Tensor Core* | 3,958 TFLOPS per GPU | 7,916 TFLOPS (Combined Pair) |
| Server Configurations | 8× GPU HGX System | 4× GPU & 10× GPU PCIe Systems |
| Ideal Workload | Foundation Model Training, Digital Twins | LLM Inference (RAG), Fine-Tuning |

\* Tensor Core figures are peak values with sparsity.

Primary Use Cases

High Performance Computing

Unprecedented computational power for scientific research and simulations with large datasets and intricate calculations.

Deep Learning Training

Enabling faster and more accurate deep learning tasks for rapid advancements in artificial intelligence.

Language Processing

Empowering applications for tasks like sentiment analysis and language translation with remarkable precision.

Conversational AI

Enhancing the processing speed and efficiency of chatbots and virtual assistants for more engaging user experiences.