System Requirements for Artificial Intelligence in 2025

As artificial intelligence (AI) continues to evolve rapidly, the demands on the hardware and software systems that power it are increasing as well. In 2025, AI will require even more capable systems, such as dedicated AI workstations, to keep pace with the growing complexity and scale of AI applications. The exact requirements depend on the use case, whether that is deep learning, natural language processing, computer vision, or edge AI. In this blog, we’ll break down the essential system components needed to support AI tasks, the trends shaping AI hardware, and the role these systems play in powering AI breakthroughs.

Hardware Requirements

The hardware and software requirements for AI in 2025 will be driven by several key components: the central processing unit (CPU), the graphics processing unit (GPU), memory (RAM), storage, and specialized accelerators for machine learning tasks. The operating system, networking, and cooling are also essential for smooth operation and optimal performance under AI workloads.

1. CPU

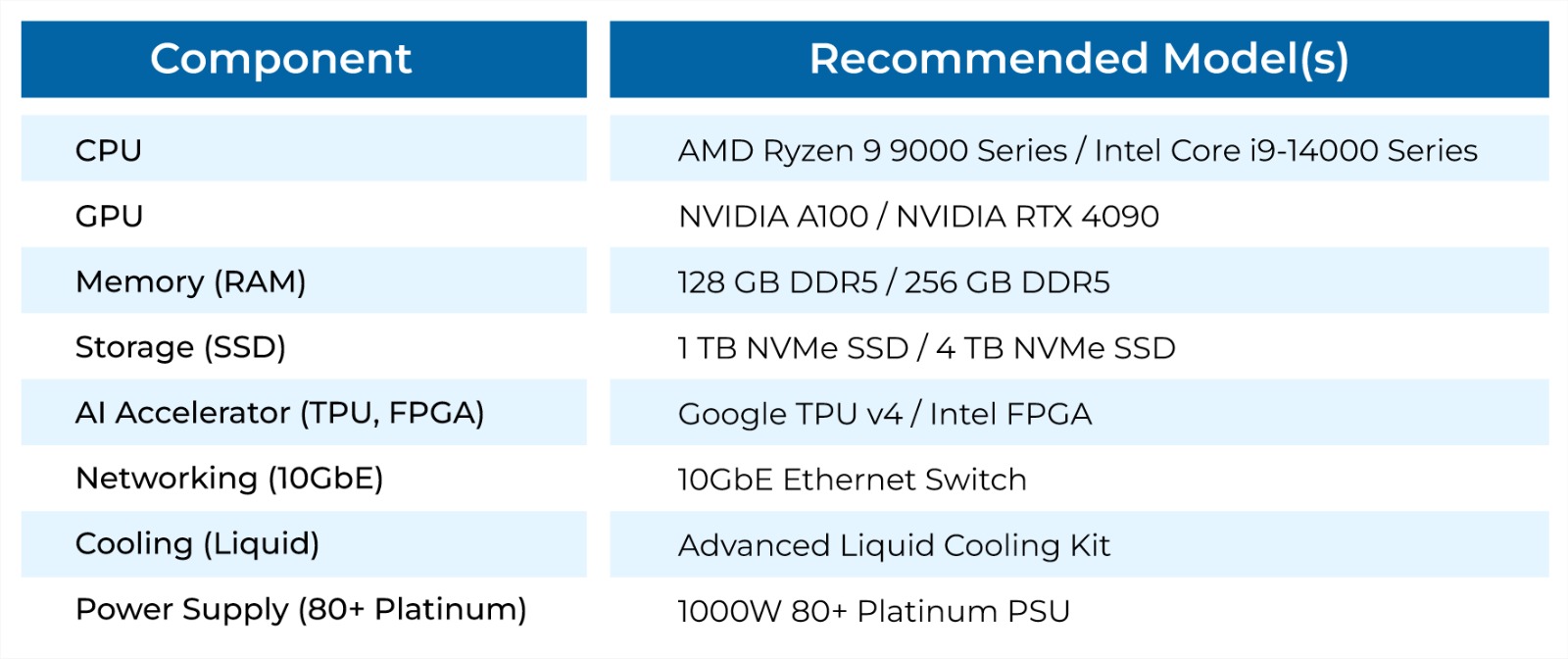

The CPU is the backbone of any AI system. While much of the heavy AI computation has shifted to GPUs and specialized accelerators, the CPU still plays a critical role: it handles general-purpose tasks, manages system operations, feeds data to the accelerators, and runs some AI computations itself, such as data preprocessing and lighter inference workloads. In 2025, the CPU will need to support AI workloads that demand both high performance and efficiency.

Key Features for AI in 2025:

- Multi-core Architecture: AI systems will demand CPUs with multiple cores to handle simultaneous tasks and parallel processing efficiently.

- High Clock Speeds: Faster clock speeds reduce processing time for the parts of an AI workload that run on a single core, such as data preprocessing, orchestration, and other serial steps in a training pipeline.

- Energy Efficiency: With the increasing demand for AI processing, energy-efficient CPUs will be crucial to maintaining a balance between performance and power consumption.
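
The trade-off between core count and clock speed can be made concrete with Amdahl's law, a standard model (not something this post specifies) in which only a fraction of a workload can run in parallel. The sketch below uses `parallel_fraction` and `cores` as illustrative inputs; the 90% parallel fraction is an assumption chosen for the example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Return the ideal speedup predicted by Amdahl's law.

    parallel_fraction: share of the runtime that can be split across cores (0..1).
    cores: number of CPU cores working on the parallel share.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # this part runs on one core regardless
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative assumption: 90% of a preprocessing job parallelizes cleanly.
    for n in (1, 4, 8, 16, 32):
        print(f"{n:>2} cores -> {amdahl_speedup(0.9, n):.2f}x")
```

With these assumed numbers, 16 cores give about a 6.4x speedup, and the ceiling is 10x no matter how many cores are added, because the 10% serial portion only gets faster with higher per-core performance. That is why both core count and clock speed appear in the list above.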

Recommended CPUs for AI in 2025:

- AMD Ryzen 9 9000 Series – A multi-core powerhouse with high clock speeds and strong efficiency.

- Intel Core i9 14th Generation – Intel’s 14th-generation desktop CPUs (such as the Core i9-14900K), which combine performance and efficiency cores for high core counts and strong multi-threaded performance.

- ARM-based Processors – ARM chips are expected to play a larger role in edge AI applications due to their energy efficiency.

2. GPU

In 2025, the GPU will continue to be the primary hardware component for training and inference in AI systems. Graphics processing units are designed to perform parallel computations, making them ideal for deep learning tasks that require handling large volumes of data.

Key Features for AI in 2025:

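- Tensor Cores for Deep Learning: Tensor Cores, found in NVIDIA's GPUs, are dedicated units for the matrix multiply-accumulate operations at the heart of neural networks, and they greatly accelerate deep learning, especially at reduced precision such as FP16 or BF16.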

- High Memory Bandwidth: AI workloads require GPUs with high memory bandwidth to quickly transfer data between the GPU's memory and cores.

- CUDA Cores: NVIDIA's CUDA cores enable parallel processing for AI tasks, accelerating model training and inference.
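
Why memory bandwidth matters can be shown with back-of-the-envelope arithmetic. When a large language model generates text one token at a time (batch size 1), each token requires reading roughly every weight from GPU memory once, so bandwidth sets a lower bound on latency. The sketch assumes a hypothetical 7-billion-parameter model stored in FP16 (2 bytes per parameter) and an illustrative bandwidth of 1,000 GB/s; substitute the figures from your GPU's data sheet.

```python
def min_time_per_pass_ms(num_params: float, bytes_per_param: float,
                         bandwidth_gb_s: float) -> float:
    """Lower bound (in milliseconds) for reading all weights once from GPU memory.

    Ignores compute time, caches, and KV-cache traffic, so real latency is higher.
    bandwidth_gb_s is in gigabytes per second (1 GB = 1e9 bytes).
    """
    if bandwidth_gb_s <= 0:
        raise ValueError("bandwidth must be positive")
    total_bytes = num_params * bytes_per_param
    seconds = total_bytes / (bandwidth_gb_s * 1e9)
    return seconds * 1e3


if __name__ == "__main__":
    # Assumptions for illustration: 7e9 parameters, FP16 weights, 1,000 GB/s.
    ms = min_time_per_pass_ms(7e9, 2, 1000)
    print(f"~{ms:.0f} ms per token at best, i.e. at most ~{1000 / ms:.0f} tokens/s")
```

Under these assumptions the bound is about 14 ms per token. Doubling the bandwidth halves it, which is why data-center GPUs pair their compute with very fast memory.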

Recommended GPUs for AI in 2025:

- NVIDIA A100 Tensor Core GPU – The A100 offers exceptional performance for AI and machine learning tasks, especially in data centers and high-performance computing.

- NVIDIA GeForce RTX 4090 – A high-end GPU built on NVIDIA's Ada Lovelace architecture, with 24 GB of GDDR6X memory, well suited to AI model training and inference on a workstation.

- AMD Instinct MI300 Series – AMD’s data-center accelerators continue to gain traction for AI workloads, offering competitive performance, often at a lower cost.

3. Memory (RAM)

AI workloads, especially deep learning tasks, require a significant amount of memory to store data, intermediate results, and large models. In 2025, systems will need to support large-scale memory configurations to efficiently manage AI data.

Key Features for AI in 2025:

- High Bandwidth Memory (HBM): HBM, the stacked memory used on data-center GPUs and accelerators such as the NVIDIA A100 and AMD Instinct MI300, delivers the bandwidth needed to reduce bottlenecks in data processing.

- Large Capacity: Models are becoming larger and more complex, requiring systems with ample RAM (64 GB or more) to handle demanding AI workloads.

- DDR5 and Beyond: DDR5 memory, now standard on current desktop and server platforms, offers faster data transfers and improved efficiency over DDR4.
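
A rough way to size memory is to multiply a model's parameter count by the bytes each parameter needs. The byte counts below are common rules of thumb, not figures from this post: 4 bytes per parameter for FP32 weights, 2 for FP16/BF16, and about 16 for mixed-precision training with the Adam optimizer. The 7-billion-parameter model is hypothetical, and activations, data buffers, and framework overhead come on top, so treat the results as lower bounds.

```python
# Approximate bytes needed per parameter (rules of thumb; activations not included).
BYTES_PER_PARAM = {
    "fp32_weights": 4,
    "fp16_weights": 2,
    # FP16 weights (2) + FP16 grads (2) + FP32 master weights (4) + Adam m and v (4 + 4)
    "mixed_precision_adam_training": 16,
}


def estimate_memory_gb(num_params: float, mode: str) -> float:
    """Return a lower-bound memory estimate in gigabytes (1 GB = 1e9 bytes)."""
    if mode not in BYTES_PER_PARAM:
        raise KeyError(f"unknown mode {mode!r}; choose from {sorted(BYTES_PER_PARAM)}")
    return num_params * BYTES_PER_PARAM[mode] / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for mode in BYTES_PER_PARAM:
        print(f"{mode:<32} ~{estimate_memory_gb(params, mode):,.0f} GB")
```

Under these assumptions, FP16 inference on a 7B model needs about 14 GB for the weights alone, while full mixed-precision training needs over 100 GB before activations. These figures apply to whichever memory holds the model: GPU memory during GPU training, or system RAM for CPU inference or when optimizer state is offloaded.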

Recommended RAM for AI in 2025:

- 128 GB DDR5 RAM – Ideal for handling large datasets and running multiple AI models concurrently.

- 256 GB DDR5 RAM – For ultra-demanding AI applications that require memory-intensive computations, especially in training large models.

4. Storage

AI systems generate and process vast amounts of data, making storage a critical component. In 2025, storage requirements will continue to increase as datasets grow in size and complexity. The storage system needs to be fast, reliable, and scalable.

Key Features for AI in 2025:

- High-speed SSDs: Solid-state drives (SSDs) will continue to dominate due to their high read/write speeds, which are crucial for data-heavy AI applications.

- Storage Capacity: AI datasets and models can occupy terabytes of storage, so systems will need to support multi-terabyte configurations.

- NVMe Storage: SSDs that use the Non-Volatile Memory Express (NVMe) protocol over PCIe will be the standard for AI applications due to their low latency and high throughput.
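
The effect of drive speed on data-heavy workloads is easy to estimate: the minimum time to read a dataset once is its size divided by the drive's sustained read throughput. The throughput values below are assumed ballpark figures (about 550 MB/s for a SATA SSD and 7,000 MB/s for a PCIe 4.0 NVMe SSD), and the 1 TB dataset is hypothetical; real numbers depend on the drive, file sizes, and access pattern.

```python
def read_time_minutes(dataset_gb: float, throughput_mb_s: float) -> float:
    """Minimum time in minutes to read dataset_gb once at throughput_mb_s.

    Uses decimal units (1 GB = 1000 MB), as drive vendors do. Ignores
    filesystem overhead and small-file penalties, so real times are longer.
    """
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = dataset_gb * 1000 / throughput_mb_s
    return seconds / 60


if __name__ == "__main__":
    # Assumed sequential read speeds for illustration only.
    drives = {"SATA SSD": 550, "PCIe 4.0 NVMe SSD": 7000}
    dataset_gb = 1000  # a hypothetical 1 TB training dataset
    for name, speed in drives.items():
        print(f"{name:<18} ~{read_time_minutes(dataset_gb, speed):.1f} min per full pass")
```

Because training typically reads the dataset many times (once per epoch), the gap compounds whenever the data does not fit in the operating system's page cache.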

Recommended Storage for AI in 2025:

- 1 TB NVMe SSD – For fast data access and lower latency in AI applications.

- 4 TB or larger NVMe SSD – To handle large datasets and multi-model AI operations.

5. Specialized AI Accelerators

As AI workloads continue to evolve, specialized accelerators will become more prevalent. These include dedicated AI chips like Google’s Tensor Processing Unit (TPU) or custom FPGAs (Field-Programmable Gate Arrays), which are optimized for specific machine learning tasks.

Key Features for AI in 2025:

- Tensor Processing Units (TPUs): TPUs are designed for accelerating the training and inference of deep learning models and are particularly useful in large-scale AI applications.

- Field-Programmable Gate Arrays (FPGAs): FPGAs are customizable hardware accelerators that can be tailored for specific AI workloads, offering low latency and high performance.

- AI Co-Processors: These co-processors can offload specific tasks from the CPU and GPU, providing specialized performance for machine learning algorithms.

Recommended AI Accelerators for AI in 2025:

- Google Cloud TPU v4 – For large-scale training of deep learning models; Cloud TPUs are available through Google Cloud rather than as standalone hardware.

- Intel FPGAs (such as the Agilex family) – To accelerate specialized machine learning workloads.

- NVIDIA TensorRT – A software SDK, rather than a hardware accelerator, that optimizes trained neural networks for fast inference on NVIDIA GPUs.

6. Networking and Connectivity

In AI systems, especially in multi-node configurations, networking plays a critical role in ensuring fast communication between components and systems. With the rise of distributed AI and edge computing, high-speed networking is essential for efficient data transfer.

Key Features for AI in 2025:

- 10GbE/40GbE Networking: High-speed Ethernet connections will be essential for data centers and multi-node AI systems, and large training clusters often use even faster links such as 100GbE or InfiniBand.

- Low Latency: AI systems need low-latency networks to ensure real-time data processing and decision-making.

- Wireless Connectivity for Edge AI: In edge AI applications, Wi-Fi 6, 5G, and other advanced wireless technologies will enable seamless communication between devices.
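
For multi-node training, link speed translates directly into time spent exchanging data. A common pattern, ring all-reduce (used by libraries such as NVIDIA NCCL, and named here as an illustrative assumption rather than something this post prescribes), has each of N nodes send roughly 2(N-1)/N times the gradient size per synchronization. The sketch combines that formula with the link rate; the 14 GB gradient size and 4-node setup are hypothetical, and protocol overhead is ignored.

```python
def ring_allreduce_seconds(gradient_gb: float, nodes: int, link_gbit_s: float) -> float:
    """Ideal time for one ring all-reduce of gradient_gb gigabytes.

    Each node sends 2 * (nodes - 1) / nodes * gradient_gb over its link.
    link_gbit_s is the line rate in gigabits per second (e.g. 10 for 10GbE).
    Latency, protocol overhead, and compute overlap are ignored.
    """
    if nodes < 2:
        return 0.0  # a single node has nothing to exchange
    bytes_sent = 2 * (nodes - 1) / nodes * gradient_gb * 1e9
    link_bytes_s = link_gbit_s * 1e9 / 8  # convert bits per second to bytes per second
    return bytes_sent / link_bytes_s


if __name__ == "__main__":
    # Hypothetical: 14 GB of FP16 gradients (7e9 parameters x 2 bytes), 4 nodes.
    for rate in (10, 40):
        t = ring_allreduce_seconds(14, 4, rate)
        print(f"{rate}GbE: ~{t:.1f} s per gradient sync")
```

Under these assumptions one synchronization takes about 17 s on 10GbE versus about 4 s on 40GbE, which is why frameworks try to overlap communication with computation and why faster interconnects pay off as clusters grow.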

Recommended Networking for AI in 2025:

- 10GbE/40GbE Ethernet Switches – For large-scale AI infrastructures.

- Wi-Fi 6 and 5G for Edge AI – For efficient and fast communication in edge AI setups.

7. Cooling and Power Efficiency

As AI systems become more powerful, the demand for effective cooling solutions will grow. The heat generated by high-performance CPUs, GPUs, and specialized accelerators can reduce system efficiency and shorten component lifespan.

Key Features for AI in 2025:

- Advanced Cooling Solutions: Liquid cooling, enhanced heat sinks, and active cooling systems will be essential for maintaining optimal temperatures in AI systems.

- Power Efficiency: Energy-efficient power supplies and components will be critical in balancing performance with energy consumption, especially in large-scale AI data centers.

Recommended Cooling and Power Solutions:

- Liquid Cooling Systems – For high-performance workstations and data centers.

- Power Supplies with High Efficiency Ratings – 80 PLUS Platinum or Titanium rated power supplies to ensure energy efficiency.
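
A power supply's efficiency rating determines how much power is drawn from the wall for a given component load, and the difference becomes heat that the cooling system must remove. The sketch assumes efficiencies of 92% (80 PLUS Platinum) and 94% (80 PLUS Titanium), the certification thresholds at 50% load for 115 V internal supplies, a hypothetical 1,000 W sustained load, and the conversion 1 W ≈ 3.412 BTU/h.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 watt is about 3.412 BTU per hour


def wall_power_watts(dc_load_watts: float, efficiency: float) -> float:
    """AC power drawn from the wall to deliver dc_load_watts at the given efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_load_watts / efficiency


def psu_loss_watts(dc_load_watts: float, efficiency: float) -> float:
    """Power lost as heat inside the power supply itself."""
    return wall_power_watts(dc_load_watts, efficiency) - dc_load_watts


if __name__ == "__main__":
    load = 1000.0  # hypothetical sustained component load in watts
    for rating, eff in {"Platinum": 0.92, "Titanium": 0.94}.items():
        wall = wall_power_watts(load, eff)
        # Essentially all power drawn from the wall ends up as heat in the room.
        print(f"{rating}: ~{wall:.0f} W from the wall, "
              f"~{psu_loss_watts(load, eff):.0f} W lost in the PSU, "
              f"~{wall * WATTS_TO_BTU_PER_HOUR:,.0f} BTU/h of heat to remove")
```

At this assumed load the Titanium unit wastes roughly 23 W less than the Platinum one: small for one machine, but it adds up across many workstations running around the clock.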

8. Operating System and Software

The operating system and software layer play a crucial role in managing AI workloads. In 2025, AI systems will require software that can support multi-threading, parallel processing, and optimized AI frameworks.

Key Features for AI in 2025:

- Linux-based OS: Linux will continue to be the preferred OS for AI applications due to its flexibility, open-source nature, and support for powerful machine learning libraries.

- AI Frameworks: Software frameworks like TensorFlow and PyTorch will continue to evolve to take advantage of new hardware and accelerate AI workloads. (Apache MXNet, once a common choice, was retired and moved to the Apache Attic in 2023.)

- Edge Computing Platforms: Edge hardware and software, such as NVIDIA Jetson modules (with the JetPack SDK) and the Intel OpenVINO toolkit, will be essential for deploying AI on small devices.
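
Frameworks expose the available hardware through their APIs, so the same script can run on a workstation GPU or fall back to the CPU. A minimal sketch, assuming PyTorch is the framework in use: `torch.cuda.is_available()` checks for a CUDA-capable NVIDIA GPU (ROCm builds of PyTorch for AMD GPUs report through the same call), and `torch.backends.mps.is_available()` checks for Apple-silicon GPUs in recent releases. The function name `pick_device` is our own, and the code falls back to the CPU if PyTorch is not installed.

```python
def pick_device() -> str:
    """Return the name of the best available PyTorch device: 'cuda', 'mps', or 'cpu'."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch not installed; nothing to accelerate

    if torch.cuda.is_available():  # NVIDIA CUDA (or AMD ROCm builds)
        return "cuda"
    mps = getattr(torch.backends, "mps", None)  # absent in older PyTorch releases
    if mps is not None and mps.is_available():  # Apple-silicon GPU
        return "mps"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print(f"Running on: {device}")
    try:
        import torch
        x = torch.randn(1024, 1024, device=device)
        y = x @ x  # the matrix multiply runs on the selected device
        print(tuple(y.shape))
    except ImportError:
        print("Install PyTorch to run the tensor example.")
```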

Table for AI System Requirements

Explore all AI workstations at proxpc.com

Conclusion

As AI continues to advance, the hardware and system requirements for AI applications will become more demanding. By 2025, AI systems will require state-of-the-art CPUs, GPUs, memory, storage, accelerators, and networking components to handle the growing complexity of machine learning models, data processing, and real-time decision-making. Whether for edge AI applications, deep learning research, or large-scale AI deployments, understanding the system requirements and selecting the right components will be crucial for optimizing performance and ensuring the success of AI projects.

By staying ahead of these technological advancements and investing in the right hardware, organizations can build the AI infrastructure needed to thrive in the increasingly AI-driven world of 2025.

For more information, visit www.proxpc.com.

Shubham Kumar

As a Frontend Developer at ProX PC, Shubham specializes in building high-performance web applications using React.js and Next.js. By prioritizing clean, scalable code and efficient API integrations, he ensures every project remains maintainable and robust.
