What are LLMs?

Large Language Models (LLMs) are transformer networks pretrained on vast text corpora with a next‑token prediction objective, then refined via instruction tuning and preference alignment to become helpful and safe assistants. They power summarization, generation, code assistance, retrieval‑augmented generation (RAG), and tool‑using agents across domains.

What are VLMs?

Vision‑Language Models align image features and text tokens in a shared space using dual encoders like CLIP or fusion encoders for tighter reasoning, enabling captioning, visual QA, multimodal retrieval, and image‑aware RAG.

Why Pro Maestro 4‑GPU

- Compute: Up to 4× 600W GPUs for accelerated FP16/BF16 training and high‑throughput inference.
- Memory and I/O: Up to 4 TB DDR5 ECC, 125 TB flash for datasets/checkpoints, and 200 TB HDD for cold storage.
- Fabric: Up to 400 Gb/s networking for distributed jobs, object stores, and vector indexes.

This balance lets teams fine-tune 7B–13B models efficiently, run PEFT adapters at scale, and train compact VLMs while keeping sensitive data inside the perimeter.

LLM training in three phases

- Pretraining: Optional on proprietary text to encode domain patterns when public checkpoints are insufficient.
- Supervised instruction tuning: Curate prompt→response pairs in JSONL for task behaviour and format consistency.
- Preference alignment: Use preference pairs or ratings to reduce undesired behaviours and improve response helpfulness.
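
As a rough illustration of the data shapes behind the second and third phases, the sketch below validates instruction records and preference pairs and serializes them as JSONL. The field names (`prompt`, `response`, `chosen`, `rejected`) and the helper names are assumptions for illustration only; the schema your training framework expects may differ.

```python
import json

# Assumed schemas for illustration; adjust to your training framework.
INSTRUCTION_FIELDS = ("prompt", "response")
PREFERENCE_FIELDS = ("prompt", "chosen", "rejected")


def is_valid(record, required_fields):
    """Return True if every required field is a non-empty string."""
    return all(
        isinstance(record.get(name), str) and bool(record[name].strip())
        for name in required_fields
    )


def to_jsonl(records, required_fields):
    """Serialize valid records as JSONL text (one JSON object per line)."""
    lines = [
        json.dumps(record, ensure_ascii=False)
        for record in records
        if is_valid(record, required_fields)
    ]
    return "\n".join(lines) + ("\n" if lines else "")


if __name__ == "__main__":
    sft = [
        {"prompt": "List the steps to reset a badge.", "response": "1. Open the portal."},
        {"prompt": "", "response": "This record is dropped: empty prompt."},
    ]
    prefs = [
        {"prompt": "Explain RAG briefly.", "chosen": "A grounded answer.", "rejected": "An off-topic answer."},
    ]
    print(to_jsonl(sft, INSTRUCTION_FIELDS), end="")
    print(to_jsonl(prefs, PREFERENCE_FIELDS), end="")
```

Rejecting malformed records before training is a cheap way to protect format consistency; stricter checks (length limits, deduplication) can be layered on the same pattern.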

Parameter‑efficient fine‑tuning (PEFT)

- LoRA/QLoRA: Insert low‑rank adapters on attention/projection layers and train a tiny fraction of parameters with 4‑bit or 8‑bit base weights to cut VRAM and time.
- When to use full fine‑tune: Safety‑critical domains with large, clean datasets or when adapters plateau on accuracy.
- Practical defaults: r=8–64, alpha=16–64, target modules q_proj,k_proj,v_proj,o_proj; learning rate 2e‑4 to 2e‑5; 1–3 epochs with early stopping.
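
To make "a tiny fraction of parameters" concrete, here is a back-of-envelope sketch of how many weights LoRA adds. It assumes a hypothetical 7B-class model with 32 layers and square 4096×4096 projections for all four target modules; models with grouped-query attention have smaller k/v projections, so treat the result as an upper-bound estimate for that shape.

```python
def lora_params_per_module(d_in, d_out, r):
    """LoRA adds A (r x d_in) and B (d_out x r) beside a frozen d_out x d_in weight."""
    return r * (d_in + d_out)


def lora_trainable_params(hidden_size, num_layers, r, modules_per_layer=4):
    """Total adapter parameters, assuming square hidden_size x hidden_size projections."""
    per_module = lora_params_per_module(hidden_size, hidden_size, r)
    return num_layers * modules_per_layer * per_module


def lora_scaling(alpha, r):
    """Standard LoRA multiplies the adapter update by alpha / r."""
    return alpha / r


if __name__ == "__main__":
    # Hypothetical 7B-class shape: hidden size 4096, 32 layers, q/k/v/o adapters.
    trainable = lora_trainable_params(hidden_size=4096, num_layers=32, r=16)
    print(f"adapter parameters: {trainable:,}")
    print(f"share of a 7e9-parameter base: {trainable / 7e9:.2%}")
    print(f"update scaling for alpha=32, r=16: {lora_scaling(32, 16)}")
```

Doubling `r` doubles the adapter size, which is why the r=8–64 range still stays well under one percent of the base model for this shape.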

VLM training essentials

- Architectures:
  - Dual encoders for fast retrieval and scalable indexing.
  - Fusion encoders for step‑by‑step reasoning in captioning/VQA.
- Objectives: Contrastive loss on image–text pairs, captioning loss for generative quality, and masked modelling for robustness.
- Metrics: Recall@K for retrieval, CIDEr/BLEU for captioning, and QA accuracy for VQA.
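
The contrastive objective can be sketched in plain Python as a symmetric InfoNCE loss of the kind used by CLIP-style dual encoders. This is a minimal illustration, not a training implementation: the function names are hypothetical, the temperature of 0.07 is an assumed starting value, and embeddings are assumed to be non-zero vectors where image `i` matches text `i`.

```python
import math


def l2_normalize(vec):
    """Scale a non-zero vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def log_softmax(row):
    """Numerically stable log-softmax over a list of logits."""
    peak = max(row)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in row))
    return [x - log_sum for x in row]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: matching image/text pairs share the same batch index."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by temperature
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in txts]
        for img in imgs
    ]
    # image -> text direction: row i should select column i
    i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # text -> image direction: column j should select row j
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    t2i = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (i2t + t2i) / 2


if __name__ == "__main__":
    images = [[1.0, 0.0], [0.0, 1.0]]
    print("aligned pairs:", contrastive_loss(images, [[1.0, 0.0], [0.0, 1.0]]))
    print("swapped pairs:", contrastive_loss(images, [[0.0, 1.0], [1.0, 0.0]]))
```

Lower temperatures sharpen the softmax, so mismatched pairs are penalized more heavily; in practice the temperature is often learned rather than fixed.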

Multimodal use cases built on Pro Maestro

-

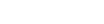

Auto‑captioning and dataset bootstrapping: Start with a captioning VLM to generate initial captions for raw image collections, then human‑verify to reach training quality.

-

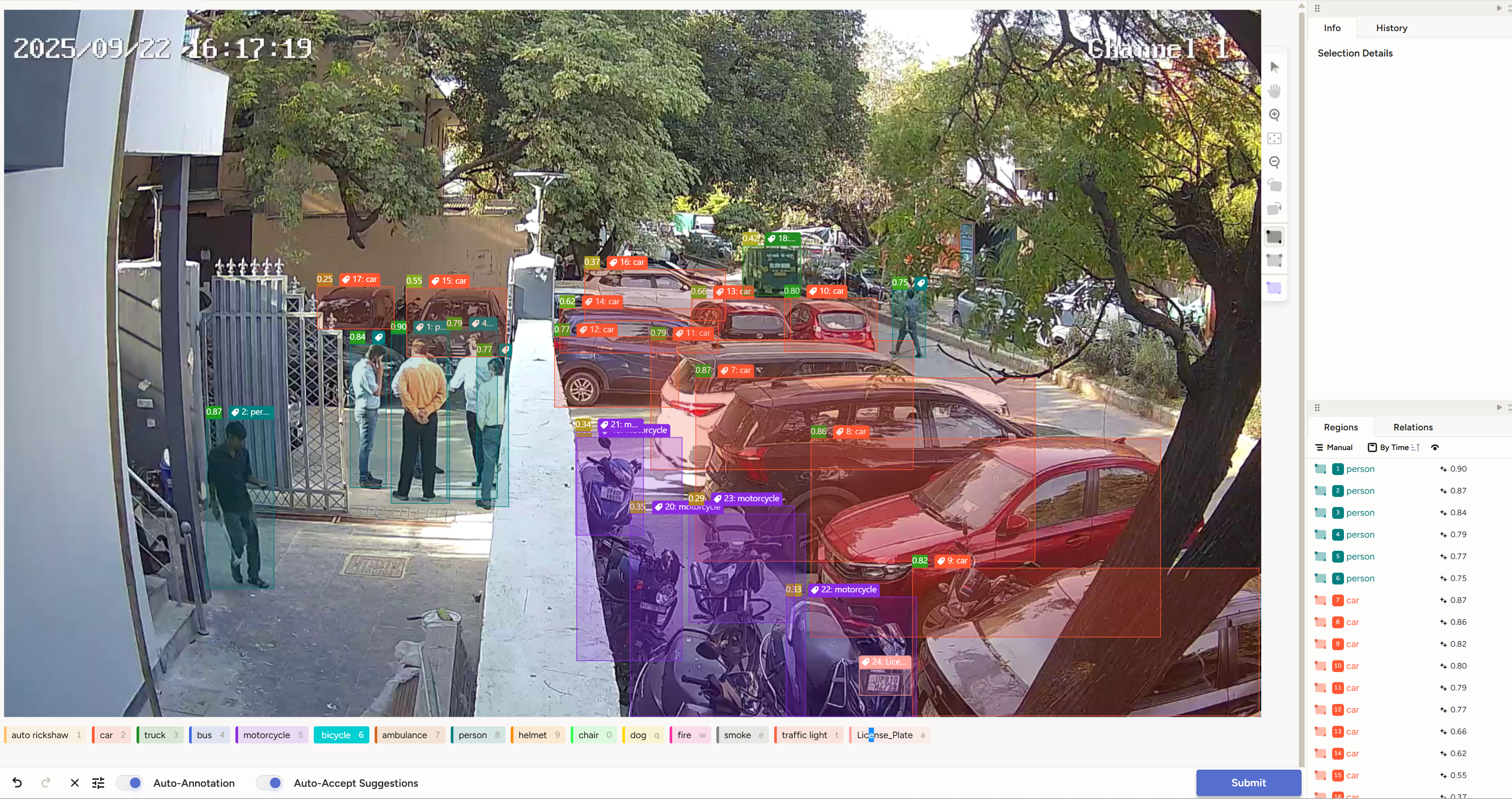

Visual analytics and search: Dual‑encoder VLM embeddings with a vector DB enable text→image and image→text search for CCTV, retail, and media archives.

-

Domain LLMs with tools: Finely tuned assistants for SOPs, code, and document QA incorporating tool calling and retrieval.

-

Preparing datasets on multi‑GPU

Image captioning dataset

- Collect and deduplicate images: store paths and hashes.
- Generate draft captions with a base VLM: normalize style and vocabulary; add hard negatives for retrieval.
- Curate JSONL: {image_path, caption, tags, split}.

Computer vision dataset

- Use detector proposals to seed boxes/masks; human‑verify in batches.
- Maintain COCO‑style splits with versioned annotations; record augmentations and camera metadata.
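
The captioning steps above can be sketched with the standard library alone. This is a minimal illustration under stated assumptions: deduplication is exact (byte-level SHA-256), so resized or re-encoded near-duplicates would need a perceptual hash instead; the 10% validation share and all function names are hypothetical; captions are assumed to arrive as a `{image_path: caption}` mapping from whatever VLM produced the drafts.

```python
import hashlib
import json


def sha256_of(path):
    """Hash file contents in 1 MiB chunks so large images need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dedupe_images(paths):
    """Keep the first path (in sorted order) for each distinct content hash."""
    hash_to_path = {}
    for path in sorted(str(p) for p in paths):
        hash_to_path.setdefault(sha256_of(path), path)
    return hash_to_path


def assign_split(content_hash, val_percent=10):
    """Derive the split from the hash so re-runs keep each image in the same split."""
    return "val" if int(content_hash[:8], 16) % 100 < val_percent else "train"


def write_manifest(hash_to_path, captions, out_path):
    """Write one JSON object per line with the fields named in the text."""
    with open(out_path, "w", encoding="utf-8") as out:
        for content_hash, image_path in hash_to_path.items():
            record = {
                "image_path": image_path,
                "caption": captions.get(image_path, ""),
                "tags": [],
                "split": assign_split(content_hash),
            }
            out.write(json.dumps(record, ensure_ascii=False) + "\n")
```

A typical call sequence would be `unique = dedupe_images(paths)` followed by `write_manifest(unique, captions, "captions.jsonl")`, where `paths` and `captions` come from your own collection and captioning steps.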

4‑GPU training recipes

LLM LoRA quickstart

Load a 7B–13B base model in 4‑bit, attach LoRA on attention projections, and train with DDP across 4 GPUs using gradient accumulation to simulate larger global batches.
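
The relationship between per-GPU batch, GPU count, and gradient accumulation is simple arithmetic, sketched below. The example numbers (per-device batch of 4, 8 accumulation steps, 2048-token sequences) are assumptions chosen for illustration, not recommended settings.

```python
def global_batch_size(per_device_batch, num_gpus, grad_accum_steps):
    """Sequences contributing to one optimizer step under data parallelism."""
    return per_device_batch * num_gpus * grad_accum_steps


def tokens_per_optimizer_step(per_device_batch, num_gpus, grad_accum_steps, seq_len):
    """Upper bound on tokens per optimizer step (assumes fully packed sequences)."""
    return global_batch_size(per_device_batch, num_gpus, grad_accum_steps) * seq_len


def grad_accum_for_target(target_global_batch, per_device_batch, num_gpus):
    """Smallest accumulation count that reaches at least the target global batch."""
    per_step = per_device_batch * num_gpus
    return -(-target_global_batch // per_step)  # ceiling division


if __name__ == "__main__":
    print(global_batch_size(per_device_batch=4, num_gpus=4, grad_accum_steps=8))
    print(tokens_per_optimizer_step(4, 4, 8, seq_len=2048))
    print(grad_accum_for_target(target_global_batch=128, per_device_batch=4, num_gpus=4))
```

Accumulation trades wall-clock time for memory: each optimizer step takes `grad_accum_steps` forward/backward passes, but peak VRAM is set by the per-device batch alone.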

Knobs to watch: sequence length vs. tokens/s, gradient checkpointing, cosine LR with warmup, and fp16 loss scaling.
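
For reference, a cosine schedule with linear warmup can be written in a few lines. This is one common formulation, sketched under assumptions: warmup ramps linearly to the peak, decay follows half a cosine down to `min_lr`, and the function name and example values are hypothetical rather than taken from any particular training library.

```python
import math


def lr_at_step(step, total_steps, warmup_steps, peak_lr, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine decay to min_lr by total_steps."""
    if step < warmup_steps:
        # Ramp from peak_lr / warmup_steps up to peak_lr.
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)  # hold at min_lr past the end of the schedule
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for step in (0, 50, 100, 550, 1000):
        print(step, lr_at_step(step, total_steps=1000, warmup_steps=100, peak_lr=2e-4))
```

Warmup mostly protects the first few hundred steps, when adapter weights and optimizer statistics are still uninformative; the cosine tail lets the run settle without a hand-tuned step decay.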

Validation: Maintain a held‑out instruction set; measure task accuracy, toxicity, latency, and tokens/s for serving.

VLM contrastive+captioning quickstart

Pair a ViT‑B/16 or similar image encoder with a lightweight text encoder; train contrastive loss with temperature scaling; optionally add a captioning head for generative tasks.

Mixed precision and fused ops keep utilization high; accumulate gradients to stabilize large batch contrastive learning.

Validate retrieval with Recall@1/5/10 and captioning with CIDEr; visually inspect failure clusters and mined hard negatives.
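
Recall@K is straightforward to compute from a query-by-candidate similarity matrix. The sketch below assumes exactly one correct candidate per query, located at the same index as the query; datasets with several valid captions per image need a set-membership check instead. The function name and toy scores are illustrative only.

```python
def recall_at_k(similarity, k):
    """similarity[i][j] scores query i against candidate j; candidate i is the match."""
    hits = 0
    for i, row in enumerate(similarity):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        hits += i in ranked[:k]
    return hits / len(similarity)


if __name__ == "__main__":
    # Toy text->image scores for three queries against three images.
    scores = [
        [0.9, 0.1, 0.2],
        [0.8, 0.3, 0.1],
        [0.2, 0.7, 0.6],
    ]
    for k in (1, 2):
        print(f"Recall@{k}:", recall_at_k(scores, k))
```

Queries that miss at K=1 but hit at a larger K are natural candidates for the hard-negative inspection mentioned above.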

Deployment patterns

On‑prem LLM serving

Quantize to INT4/8 where suitable; shard tensors across the 4 GPUs (tensor parallelism); enable paged attention to maximize throughput at long context.

Autoscale replicas via process managers; use request batching and KV‑cache reuse to lift tokens/s per GPU.
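
Long-context throughput is largely a KV-cache memory question, so a quick estimate helps when sizing batches. The sketch below assumes a hypothetical 7B-class shape (32 layers, 32 key/value heads, head dimension 128) with fp16 cache entries; models using grouped-query attention have fewer key/value heads and a proportionally smaller cache.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size, bytes_per_value=2):
    """Keys plus values (factor 2) for every layer, head, position, and sequence."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


if __name__ == "__main__":
    # Hypothetical 7B-class shape with an fp16 cache.
    per_sequence = kv_cache_bytes(
        num_layers=32, num_kv_heads=32, head_dim=128, seq_len=4096, batch_size=1
    )
    print(f"{per_sequence / 1024**3:.1f} GiB per 4096-token sequence")
    print(f"{kv_cache_bytes(32, 32, 128, 4096, 16) / 1024**3:.1f} GiB for a batch of 16")
```

Because the cache grows linearly with both context length and concurrent sequences, allocating it in pages rather than as one contiguous block per request is what keeps batch sizes high at long context.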

VLM and vector search

Encode the image corpus with the VLM’s image tower and text queries with the text tower; index in a vector DB; use re‑rankers for quality.

For captioning/VQA, deploy the fusion model with a small beam‑search or nucleus sampling head and add prompt templates for consistency.
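
The dual-tower retrieval flow reduces to nearest-neighbour search over embeddings. Below is a brute-force sketch using cosine similarity; a vector DB would replace the linear scan with an approximate index. The embeddings are toy values standing in for image-tower and text-tower outputs, and the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_emb, index, k=3):
    """index is a list of (item_id, embedding); returns the k best (item_id, score)."""
    scored = [(item_id, cosine(query_emb, emb)) for item_id, emb in index]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


if __name__ == "__main__":
    # Toy image embeddings; in practice these come from the VLM's image tower.
    image_index = [
        ("cat.jpg", [1.0, 0.0]),
        ("dog.jpg", [0.0, 1.0]),
        ("kitten.jpg", [0.9, 0.1]),
    ]
    text_query = [1.0, 0.0]  # stand-in for a text-tower embedding
    for item_id, score in search(text_query, image_index, k=2):
        print(item_id, round(score, 3))
```

The top-k list from this first stage is what a re-ranker would then reorder with a slower, more accurate model.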

RAG and multimodal retrieval

Document chunking with semantic titles; multimodal stores that index screenshots, figures, and diagrams next to text chunks; hybrid retrieval (dense + lexical) for recall.

Tool integration: OCR for scanned PDFs, layout parsers, and metadata enrichers to improve grounding.
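
One common way to combine dense and lexical result lists is reciprocal rank fusion (RRF). The text above does not prescribe a fusion method, so treat this as one option among several; `k=60` is a widely used default for the smoothing constant, and the document IDs are made up for the example.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """rankings: list of ranked ID lists (best first). Returns IDs by fused score."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for deterministic output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    dense_hits = ["d2", "d1", "d3"]    # e.g. from an embedding index
    lexical_hits = ["d1", "d4", "d2"]  # e.g. from a keyword index
    print(reciprocal_rank_fusion([dense_hits, lexical_hits]))
```

RRF uses only ranks, not raw scores, which sidesteps the problem that dense similarities and lexical scores live on different scales.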

Observability and reliability

Track accuracy drift, safety regressions, and cost per 1K tokens or per image; log prompts, responses, and image IDs with privacy guards.

Shadow new checkpoints, A/B test against the live model, and gate rollout with business KPIs.

Power, storage, and networking

Power and thermals: Budget for up to four 600W GPUs; size PSUs and cooling accordingly; monitor inlet temp and hotspot deltas.

Storage layout: NVMe flash tiers for datasets and checkpoints; HDD arrays for cold archives; periodic snapshotting and checksum audits.

Fabric: 100–400 Gb/s links enable fast dataset streaming, multi‑node scale‑out, and low‑latency vector search across racks.
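
A few lines of arithmetic help sanity-check these budgets. The GPU count, 600W rating, and link speeds come from the figures above; the 800W allowance for CPUs, memory, drives, and fans, the 20% PSU headroom, and the 1 TB dataset size are assumptions for illustration. Transfer times are theoretical line-rate figures, so real throughput will be lower.

```python
def gpu_power_watts(num_gpus=4, watts_per_gpu=600):
    """Peak GPU draw for the configuration described in the text."""
    return num_gpus * watts_per_gpu


def psu_capacity_watts(gpu_watts, other_watts, headroom=0.2):
    """PSU capacity with fractional headroom on top of the estimated load."""
    return (gpu_watts + other_watts) * (1 + headroom)


def transfer_seconds(size_bytes, link_gbps, efficiency=1.0):
    """Time to move size_bytes over a link rated in decimal gigabits per second."""
    return size_bytes * 8 / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    gpus = gpu_power_watts()
    print(f"GPU budget: {gpus} W")
    # other_watts=800 is an assumed allowance for the rest of the system.
    print(f"PSU target: {psu_capacity_watts(gpus, other_watts=800):.0f} W")
    one_tb = 1e12  # assumed dataset size in bytes
    for gbps in (100, 400):
        print(f"1 TB over {gbps} Gb/s: {transfer_seconds(one_tb, gbps):.0f} s at line rate")
```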

Final Word

If you’re looking to scale your workflow, speed up production, or handle heavier projects without compromise, you can check out our Pro Maestro Series, a range of purpose-built systems designed as real solutions to real professional challenges. We’re a solution-oriented brand, focused on building machines that make your work faster, smoother, and more reliable.

Pro Maestro GQ (4 GPU Server)

A compact powerhouse for 3D rendering, animation, and AI prototyping. Ideal for small studios and creators who need high performance in limited space.

Pro Maestro GE (8 GPU Server)

An exclusive in India, supporting the latest GeForce RTX 5090 series GPUs. Built for AI training, deep learning, simulation, and high-end VFX — the kind of system serious professionals rely on for heavy compute workloads.

Pro Maestro GD (10 GPU Server)

Our most advanced dense-architecture system, optimized for LLM training, large-scale simulation, and enterprise-grade rendering. Perfect for research institutions and data centers pushing performance limits.

From AI and VFX to simulation and generative workloads, the Pro Maestro Series turns complex computing into effortless performance.

Pro Maestro Series — For Professionals, By Professionals.